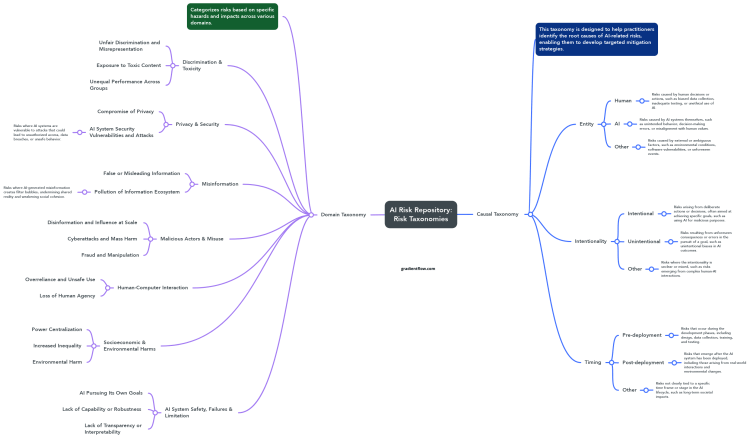

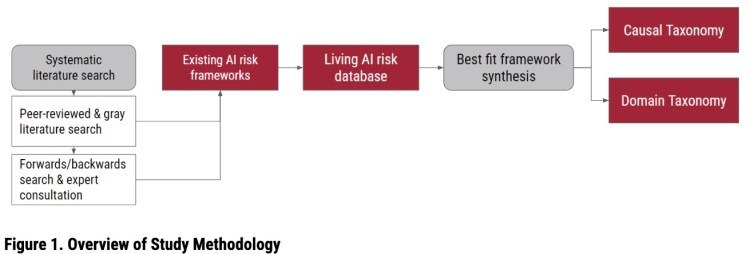

The AI Risk Repository is a new initiative that addresses the fragmented and inconsistent landscape of AI risk frameworks. This comprehensive, accessible living database is meant to serve as a common frame of reference for understanding and addressing the risks posed by AI systems. Rather than adding yet another standalone framework to an already fragmented field, the repository synthesizes 43 existing AI risk classification frameworks and catalogs more than 700 distinct risks associated with AI. The aim is to give AI teams, policymakers, researchers, and other stakeholders a unified tool for managing the complex and evolving risks of AI technologies.

The repository is organized around two primary taxonomies. The Causal Taxonomy classifies each risk by the entity that causes it (human or AI), its intentionality (intentional or unintentional), and its timing (before or after deployment), giving a more nuanced picture of where risks originate. The Domain Taxonomy groups risks into seven domains and 23 subdomains, covering areas such as privacy and security, misinformation, and AI system safety. This structure helps users navigate risks and plan mitigations, supporting the development of AI systems that are robust, ethical, and aligned with societal values.
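
To make the two taxonomies concrete, here is a minimal Python sketch of how a team might tag repository entries internally. The three causal dimensions follow the description above; the `OTHER` catch-all values, the class and function names, and the two sample entries are illustrative assumptions rather than the repository's actual schema, and subdomains are omitted for brevity.

```python
from dataclasses import dataclass
from enum import Enum


class Entity(Enum):
    """Causal Taxonomy: who or what causes the risk."""
    HUMAN = "human"
    AI = "ai"
    OTHER = "other"  # assumed catch-all


class Intent(Enum):
    """Causal Taxonomy: whether the outcome was intended."""
    INTENTIONAL = "intentional"
    UNINTENTIONAL = "unintentional"
    OTHER = "other"  # assumed catch-all


class Timing(Enum):
    """Causal Taxonomy: when the risk arises relative to deployment."""
    PRE_DEPLOYMENT = "pre-deployment"
    POST_DEPLOYMENT = "post-deployment"
    OTHER = "other"  # assumed catch-all


@dataclass(frozen=True)
class RiskEntry:
    """One cataloged risk tagged with both taxonomies (hypothetical schema)."""
    description: str
    entity: Entity
    intent: Intent
    timing: Timing
    domain: str  # one of the seven Domain Taxonomy domains


def filter_risks(risks, **criteria):
    """Return the entries whose attributes equal every keyword criterion."""
    return [
        r for r in risks
        if all(getattr(r, field) == value for field, value in criteria.items())
    ]


# Made-up sample entries for illustration only.
EXAMPLE_RISKS = [
    RiskEntry("Model reveals personal data memorized during training",
              Entity.AI, Intent.UNINTENTIONAL, Timing.POST_DEPLOYMENT,
              "Privacy and security"),
    RiskEntry("Bad actor uses a model to mass-produce false news stories",
              Entity.HUMAN, Intent.INTENTIONAL, Timing.POST_DEPLOYMENT,
              "Misinformation"),
]

# Which risks are caused by humans acting on purpose?
human_intentional = filter_risks(EXAMPLE_RISKS, entity=Entity.HUMAN,
                                 intent=Intent.INTENTIONAL)
```

Keeping the causal dimensions as enums rather than free text means a typo in a filter raises an error instead of silently matching nothing.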

Bridging the Gap in AI Risk Understanding

The AI Risk Repository tackles a crucial challenge in the fast-moving field of artificial intelligence: the lack of a comprehensive framework for identifying, understanding, and mitigating AI-related risks and vulnerabilities. The fragmentation of existing risk frameworks has made it harder for AI developers, policymakers, and researchers to identify and address potential dangers comprehensively, leading to unforeseen consequences and ineffective risk management strategies.

The potential applications of this integrated approach are significant. For AI teams, the repository offers improved risk management capabilities, enabling better identification and mitigation of potential issues. This can lead to safer AI systems and better-informed decisions about AI deployment and management. Policymakers can use the repository to develop more effective regulations, contributing to more robust governance of AI technologies.

Furthermore, the repository serves as a valuable educational resource, helping to identify knowledge gaps and educate AI professionals on the complexities of AI risks. By proactively addressing these risks, the AI industry can build greater trust in AI systems and their applications, fostering broader acceptance and use.

The AI Risk Repository, while a valuable resource, has certain limitations. It requires continuous updating and may have gaps in coverage, particularly for emerging risks. The review process is not immune to human bias, and the classification system may oversimplify complex issues. Notably, the repository does not currently provide information on the impact or likelihood of each risk, which limits its practical use for prioritization. Despite these limitations, the repository represents a step forward in promoting responsible AI deployment.
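
Because the repository leaves impact and likelihood unscored, teams that want to prioritize will need to supply those estimates themselves. A common approach, swapped in here rather than taken from the repository, is a simple likelihood × impact matrix. The sketch below assumes 1 to 5 scales and a cutoff of 12; the class name, risk identifiers, and threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScoredRisk:
    """A repository risk plus team-assigned estimates (hypothetical schema)."""
    risk_id: str     # hypothetical identifier for a repository entry
    likelihood: int  # 1 (rare) to 5 (almost certain), assigned by the team
    impact: int      # 1 (negligible) to 5 (severe), assigned by the team

    def __post_init__(self):
        # Reject estimates outside the assumed 1-5 scale.
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be 1-5, got {value}")

    @property
    def score(self) -> int:
        """Likelihood times impact, ranging from 1 to 25."""
        return self.likelihood * self.impact


def prioritize(risks, threshold=12):
    """Return risks scoring at or above the threshold, highest first."""
    flagged = [r for r in risks if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


register = [
    ScoredRisk("risk-a", likelihood=2, impact=3),  # score 6
    ScoredRisk("risk-b", likelihood=4, impact=5),  # score 20
    ScoredRisk("risk-c", likelihood=3, impact=4),  # score 12
]
top = prioritize(register)  # risk-b, then risk-c
```

The scales and cutoff are placeholders; the useful habit is recording each estimate next to the repository entry it refers to, so it can be revisited as the repository changes.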

Integrating Risk Management into AI Workflows

Contextualizing the AI Risk Repository requires understanding its significance across multiple dimensions of AI risk management. Read alongside a series of articles I’ve recently written on AI risk management and mitigation, the repository not only addresses existing gaps but also lays a foundation for a more systematic approach to AI governance and alignment. To realize its potential, however, AI teams must incorporate its insights into their development processes and organizational frameworks. This integration is crucial for maximizing the repository’s impact on responsible AI development and deployment.

Develop Robust AI Incident Response Capabilities: AI systems can behave unpredictably, so organizations must develop comprehensive incident response strategies, including clear incident definitions, multi-dimensional monitoring, and pre-defined containment protocols. The AI Risk Repository provides a framework for identifying and categorizing potential risks, which supports effective incident management and helps minimize operational and reputational impact.
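
One way to make clear incident definitions and pre-defined containment protocols concrete is to write them down as data: each rule ties a monitored metric and threshold to a repository risk domain and an agreed containment action. The sketch below is a minimal illustration under that assumption; the metric names, thresholds, and action names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class IncidentRule:
    """An incident definition paired with its containment action (hypothetical)."""
    name: str
    risk_domain: str   # repository domain the rule guards against
    metric: str        # monitored signal
    threshold: float   # incident is declared when the metric exceeds this
    containment: str   # pre-agreed response


RULES = [
    IncidentRule("pii-leak", "Privacy and security",
                 "pii_leak_rate", 0.001, "disable_endpoint"),
    IncidentRule("unsupported-claims", "Misinformation",
                 "unsupported_claim_rate", 0.05, "rollback_model"),
    IncidentRule("error-spike", "AI system safety",
                 "error_rate", 0.02, "rollback_model"),
]


def triggered_rules(rules: List[IncidentRule],
                    metrics: Dict[str, float]) -> List[IncidentRule]:
    """Return rules whose metric was reported and exceeds its threshold."""
    return [r for r in rules
            if r.metric in metrics and metrics[r.metric] > r.threshold]


def containment_plan(triggered: List[IncidentRule]) -> List[str]:
    """Collect containment actions in rule order, without duplicates."""
    actions: List[str] = []
    for rule in triggered:
        if rule.containment not in actions:
            actions.append(rule.containment)
    return actions


snapshot = {"pii_leak_rate": 0.0, "unsupported_claim_rate": 0.08,
            "error_rate": 0.03}
fired = triggered_rules(RULES, snapshot)
plan = containment_plan(fired)  # ["rollback_model"]
```

Here a missing metric counts as "no incident"; a stricter team might treat a silent monitor as an incident in its own right.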

Implement a Unified AI Alignment Platform: A fragmented AI ecosystem calls for a unified platform that keeps AI systems within legal, ethical, and performance boundaries. The repository’s comprehensive risk categorization gives cross-functional teams a shared vocabulary, facilitating collaboration and alignment. Such a platform, with workflow management, analysis, and validation tools, helps ensure AI models meet alignment criteria, reducing harmful outcomes and strengthening AI governance.
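
The validation piece of such a platform can be pictured as a release gate: every check is tied to a risk domain, and a model ships only if all checks pass. This is a minimal sketch, not a description of any particular alignment platform; the check names, metric keys, thresholds, and domain assignments are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

EvalResults = Dict[str, float]
# (check name, repository domain, predicate over evaluation results)
Check = Tuple[str, str, Callable[[EvalResults], bool]]

# Missing metrics default to failing values, so the gate fails closed.
CHECKS: List[Check] = [
    ("PII leak rate below 0.1%", "Privacy and security",
     lambda r: r.get("pii_leak_rate", 1.0) < 0.001),
    ("Factual accuracy at least 90%", "Misinformation",
     lambda r: r.get("factual_accuracy", 0.0) >= 0.90),
    ("Unsafe-request compliance below 2%", "AI system safety",
     lambda r: r.get("unsafe_compliance_rate", 1.0) < 0.02),
]


def release_gate(checks: List[Check],
                 results: EvalResults) -> Tuple[bool, List[str]]:
    """Run every check; pass only if none fail, and report the failures."""
    failures = [f"{domain}: {name}"
                for name, domain, predicate in checks
                if not predicate(results)]
    return len(failures) == 0, failures


passed, failures = release_gate(CHECKS, {
    "pii_leak_rate": 0.0005,
    "factual_accuracy": 0.93,
    "unsafe_compliance_rate": 0.05,
})
```

Reporting failures by domain lets legal, policy, and engineering reviewers each see which part of the risk taxonomy blocked a release.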

Prioritize Understanding and Mitigating Misuse Patterns: As generative AI evolves, so do the risks of misuse, from misinformation to privacy violations. AI teams must understand these misuse patterns and develop countermeasures against them. A detailed categorization of generative AI misuse risks aids in designing safeguards, including enhanced content moderation and robust protections against manipulation, fraud, and harmful content creation, protecting both users and the integrity of AI systems.
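
A simple starting point for designing safeguards is a coverage check: list the misuse patterns the team cares about and confirm each one is addressed by at least one safeguard. The patterns below come from the paragraph above; the safeguard names and their mappings are hypothetical placeholders.

```python
from typing import Dict, List, Set

# Misuse patterns named in the text above.
MISUSE_PATTERNS = ["misinformation", "privacy violations", "manipulation",
                   "fraud", "harmful content"]

# Hypothetical safeguards mapped to the patterns they are meant to address.
SAFEGUARDS: Dict[str, Set[str]] = {
    "output content classifier": {"harmful content", "misinformation"},
    "PII redaction filter": {"privacy violations"},
}


def coverage_gaps(patterns: List[str],
                  safeguards: Dict[str, Set[str]]) -> List[str]:
    """Return patterns that no safeguard claims to address, in input order."""
    covered = set().union(*safeguards.values())
    return [p for p in patterns if p not in covered]


gaps = coverage_gaps(MISUSE_PATTERNS, SAFEGUARDS)  # ["manipulation", "fraud"]
```

A pattern showing up as covered says nothing about how well the safeguard works; that still has to be tested separately.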

Strengthen Supply Chain Security: Securing the complex software supply chains that AI systems rely on is crucial for maintaining their integrity and security. A recent article on AI infrastructure and supply chain vulnerabilities offers a critical reference for implementing robust security measures. Teams should manage the complexity of their AI stack, roll out updates in stages, and go beyond compliance to ensure practical, effective security from development to deployment.
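
Staged update rollouts can be reduced to a small control loop: push an update (for example, a new model or dependency version) to a growing fraction of traffic and halt at the first stage where the observed error rate crosses a limit. The sketch below assumes a hypothetical `observe_error_rate` callback that deploys to a given fraction and reports the resulting error rate; the stage fractions and the 1% limit are placeholders.

```python
from typing import Callable, Sequence, Tuple

# Fraction of traffic receiving the update at each stage (placeholder values).
DEFAULT_STAGES: Tuple[float, ...] = (0.01, 0.10, 0.50, 1.0)


def staged_rollout(observe_error_rate: Callable[[float], float],
                   max_error_rate: float = 0.01,
                   stages: Sequence[float] = DEFAULT_STAGES
                   ) -> Tuple[bool, float]:
    """Advance stage by stage, stopping at the first unhealthy stage.

    Returns (completed, fraction): fraction is the final stage on success,
    or the stage at which the rollout halted.
    """
    if not stages:
        raise ValueError("at least one stage is required")
    for fraction in stages:
        # Hypothetical callback: deploy to `fraction` of traffic, then measure.
        if observe_error_rate(fraction) > max_error_rate:
            return False, fraction  # halt; the caller is expected to roll back
    return True, stages[-1]


# Simulated update that only misbehaves once it reaches half the traffic.
result = staged_rollout(lambda fraction: 0.03 if fraction >= 0.5 else 0.002)
```

Halting at 50% rather than discovering the problem at 100% is the point of staging: the blast radius of a bad update is bounded by the stage at which it fails.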

Foster Continuous Learning and Adaptation: The dynamic AI risk landscape demands an adaptive approach. Because the repository is a living resource, teams can use it to stay informed about emerging risks and adjust their strategies accordingly. Regularly revisiting practices and folding in new insights from the repository keeps risk management relevant and helps teams maintain robust defenses against evolving AI risks.
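
Staying current with a living repository is easier if the team keeps dated snapshots and reviews what changed between them. The sketch below assumes each snapshot has been exported to CSV with a unique identifier column; the column name `risk_id` and the sample data are hypothetical, not the repository's actual export format.

```python
import csv
import io
from typing import Dict, List, Tuple

Snapshot = Dict[str, Dict[str, str]]


def load_snapshot(csv_text: str, id_field: str = "risk_id") -> Snapshot:
    """Index the rows of a CSV export by their identifier column."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row[id_field]: row for row in reader}


def diff_snapshots(old: Snapshot,
                   new: Snapshot) -> Tuple[List[str], List[str], List[str]]:
    """Return sorted IDs of (added, removed, changed) entries."""
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    changed = sorted(k for k in old.keys() & new.keys() if old[k] != new[k])
    return added, removed, changed


old = load_snapshot("risk_id,domain\nr1,Misinformation\nr2,Privacy and security\n")
new = load_snapshot("risk_id,domain\nr1,Misinformation\n"
                    "r2,AI system safety\nr3,Misinformation\n")
added, removed, changed = diff_snapshots(old, new)  # ["r3"], [], ["r2"]
```

The added and changed IDs form a natural review queue: each one is a prompt to check whether existing safeguards, scores, or incident rules need updating.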

Related Content

- Unmasking the Dark Side: Real-World Misuse of Generative AI

- What is an AI Alignment Platform?

- AI Incident Response: Preparing for the Inevitable

- Securing AI: Understanding Software Supply Chain Security

- A Deep Dive into the Challenges of Generative AI
